Asian e-Marketing

-

May - June 2009

- Search Engine Spiders (Robots) and what you should know about them

-

Mindful Employer Branding Key to Successful Staff, HR Recruitment

In modern society, building an inclusive employer brand should be considered mission critical for businesses large and small. The most successful companies are the ones that see challenges and opportunities from many different angles, and having a diverse employee population allows you to do just that. An inclusive employer brand lets you engage, recruit, and hire a wonderful spectrum of people who can bring their varied backgrounds to bear for your business. Simply put, building an inclusive employer brand brings a diverse set of experiences and perspectives to the table, which in turn allows your company to be smarter, more thoughtful, and ultimately more successful.

-

KAWO’s "Guide to China Social Metrics" helps marketing teams translate KPIs into business success

KAWO, the leading social media management platform in China, has launched its 2023 “Guide to China Social Metrics.”

-

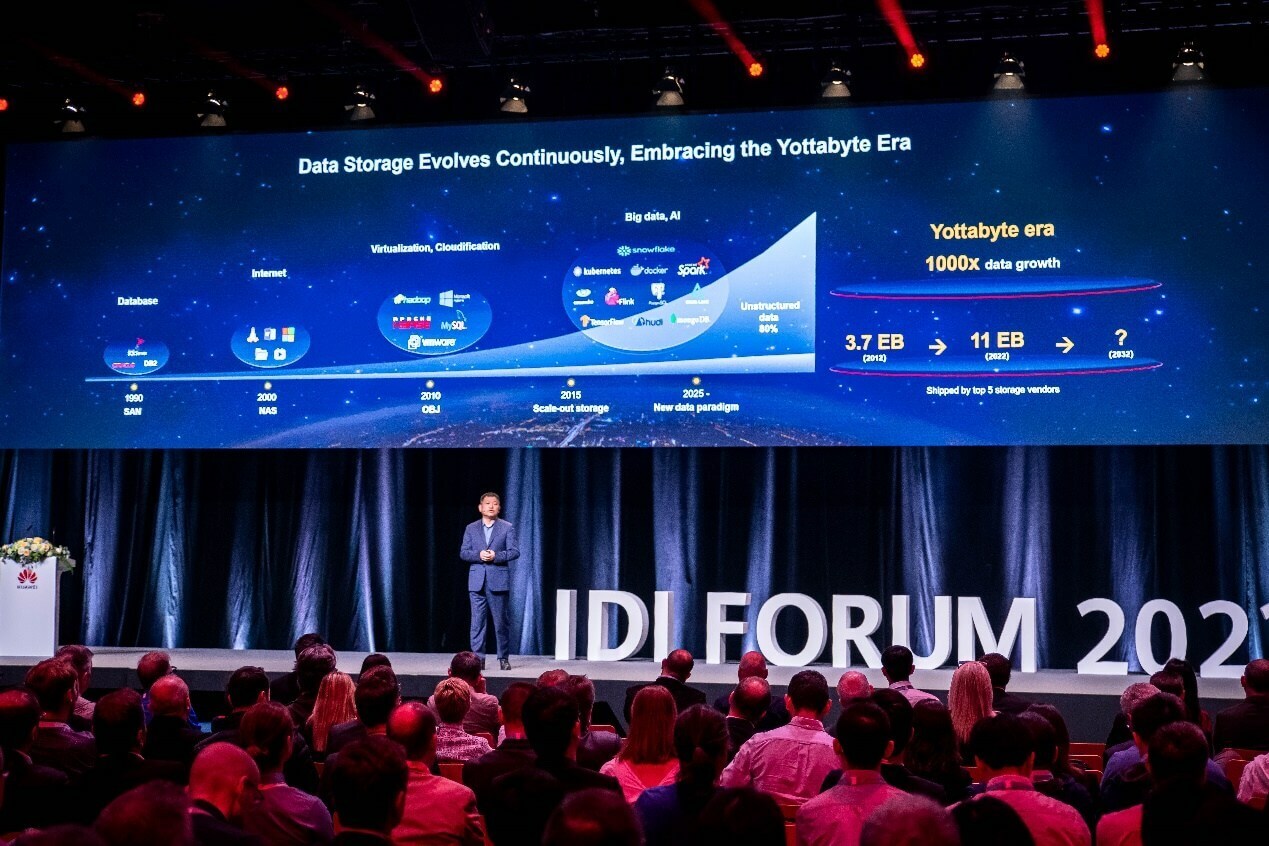

New Apps, New Data, and New Resilience: Huawei Proposes Ways of Evolving Storage in the Yottabyte Era

The Innovative Data Infrastructure Forum (IDI Forum) 2023, revolving around the theme of "New Apps ∙ New Data ∙ New Resilience," took place on May 23 in Munich, Germany. The Forum brings together global industry experts and partners to explore the future of digital infrastructure towards the yottabyte era (a yottabyte is equal to a quadrillion gigabytes).

-

New Study by MAGNA & Yahoo Urges Marketers to Pair Media Placement with Quality Creative in Order to Drive Stronger Ad Effectiveness

Media placement and creative work hand in hand when it comes to effective advertising strategies. A new study by MAGNA Media Trials and Yahoo set out to understand the role that creative quality plays in ad effectiveness, and the elements that contribute to quality creative. The study, "Creative, the Performance Powerhouse," found that while media placement helps marketers find consumers where they are, creative quality was responsible for 56% of purchase intent, illustrating the strong performance of both tactics as they work together. The study suggests that marketers can greatly benefit from making small improvements to their creative in order to optimize ad performance, while also driving brand quality and trust.

-

Meltwater delivers the future of media, social and consumer intelligence through OpenAI models and advanced algorithms

Meltwater empowers companies with a suite of solutions that spans media, social, consumer and sales intelligence by analyzing ~1 billion pieces of content each day and transforming them into vital insights. Now, the company announces new AI-powered product innovations across multiple solutions that allow customers to surface insights, boost efficiency, and generate content.

-

Annual report reveals continued surge in sophisticated bot attacks

HUMAN Security, Inc., a cybersecurity company that protects organizations by disrupting digital fraud and abuse, just announced the release of its 2023 Enterprise Bot Fraud Benchmark Report. The annual report provides insights into automated attack trends across enterprise use cases, including account takeover, brute forcing, carding, credential stuffing, inventory hoarding, scalping and web scraping.

-

SenseTime Launches "SenseNova" Foundation Model Sets and AI Computing Systems, Advancing AGI Development

SenseTime hosted a Tech Day event, sharing their strategic plan for advancing AGI (Artificial General Intelligence) development through the combination of "foundation models + large-scale computing" systems. The leading AI software company is focused on creating a better AI-empowered future through innovation and committed to advancing the state of the art in AI research, developing scalable and affordable AI software platforms that benefit businesses, people and society as a whole.

-

Microsoft Cyber Signals report highlights spike in cybercriminal activity around business email compromise

Microsoft has released its fourth edition of Cyber Signals, highlighting a surge in cybercriminal activity around business email compromise (BEC), the common tactics employed by BEC operators, and how enterprises can defend against these attacks.

-

Google's approach to further reduce the security burden on users

October marks Cybersecurity Awareness Month and with online safety being top of mind, Google has launched new products and features to help people everywhere. These releases include in-built features that work around-the-clock to take the security burden off users, and updates to privacy controls that help to easily protect personal information.

- Dark web report: Users can check whether their Gmail address has been exposed on the dark web and get guidance on how to protect themselves online by accessing the Dark Web Report in their account menu in the Google App. They just have to sign in to the Google App and tap their profile picture to open the menu.

- Passwordless by Default: Passkeys are a simpler and more secure way to sign in to sites online and can be used with Google Accounts. To make the transition to passwordless even easier, Google is offering all users the ability to set up passkeys by default.

- A new requirement to make email safer for everyone: Gmail recently announced new requirements for large senders to make email safer and more user-friendly for everyone, including enforcing authentication, enabling easy unsubscription, and enforcing a clear spam rate threshold. This is in addition to Gmail’s AI-powered defenses, which block more than 99.9% of spam, phishing and malware, or 15 billion unwanted emails every day.

- Easier access to clear browsing data: Google added an option to quickly delete users’ browsing history in Chrome without interrupting current activities. Users just need to click the three dots in the top-right corner of the Chrome browser and select “Clear browsing data”.

- Use the Google App as the credential provider for your iOS device: Google Password Manager is built into the Google App and you can already use it to securely save your passwords and sign in faster when you’re using the app. Now, you can set it as your Autofill provider so that the Google App can help you quickly and securely autofill your passwords into any app or website on your iOS device.

Feel free to read more about the announcements in Google’s blog post.

By MediaBUZZ

-

GSMA report predicts tenfold rise in 5G mobile connections in Asia Pacific by 2030 as digital transformation gathers pace

5G will account for over two-fifths (41%) of mobile connections in the Asia Pacific (APAC) region by 2030, up from 4% in 2022, according to the GSMA's Mobile Economy APAC 2023 Report.

-

SecurityHQ’s Cyber Predictions

In response to the growing number of breaches, SecurityHQ released their latest white paper to highlight analyst predictions for threats and vulnerabilities in H2 2023.

-

Black Friday Online Shopping Safety Checklist

Vigilance is urged during this 2023 Black Friday and Cyber Monday, as “AI generated scams enhance the threat to this year’s festive shoppers, as it’s revealed over 7 in 10 British people worry that AI will make it easier for criminals to commit online fraud” – NCSC.

But while AI scams like voice cloning, romance scams, and language mimicking are on the rise, “93% of the biggest spenders, millennials aged 24-35, plan to shop during this coming weekend. And they spend an average of $419.52 per person.” With cyber security threats at an all-time high, how can shoppers and businesses stay cyber safe?

Here are our top tips for staying safe online, and the preventative measures that can be taken while shopping for your latest bargain.

- Be Aware of Phishing & Quishing Attacks

SecurityHQ analysts have recently observed a significant increase in Business Email Compromise (BEC) involving phishing attacks that use QR codes (Quishing) and captchas for credential harvesting. A Quishing attack tricks the user into scanning a QR code with a mobile phone. The QR code then redirects the user to a phishing or fake website that aims to steal their credentials.

Read more about Quishing, and how to spot QR Code vulnerabilities, here.

- Read the Small Print

If something seems too good to be true, it probably is. While Black Friday deals can offer huge discounts that are genuine, people still need to make money. Anything ridiculously cheap is a red flag.

What to look for:

- It is worth checking the reputation score of retailers to determine if that retailer can be trusted.

- A missing company address, item descriptions, or specifications are all red flags. Look for the details. And do not base purchases solely on star ratings, as these can be fake.

- Pop-ups that offer free electronics are obvious scams, containing malicious phishing links, and should be avoided at all costs.

- Read the small print. Cons are often perfectly visible if you know what to look for. For example, you might see a laptop advertised at a reduced rate, buy it without reading the small print, and receive a literal picture of a laptop in the post. The devil is in the detail.

- Use Reputable Websites/Companies

Tried and Tested – Using websites that are globally known is a good way to avoid any nasty surprises. Even if it is a couple of pounds more, it is worth knowing where your money is going and that your purchase will be tracked and delivered.

Use Antivirus Software that will warn you of potentially dangerous sites in search results as well.

Look For Suspicious Emails, as well as suspicious calls and text messages. Never click on a link you are unsure of, and never provide personal information over the phone. Read more on email security, here.

- Stop, Look, Check, Pay

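Secure Sockets Layer (SSL), and its successor Transport Layer Security (TLS), is used to encrypt data before it is transmitted across the web. A valid certificate shows that control of the domain has been verified, and some certificate types also verify the organization behind it, but it does not by itself prove that a shop is trustworthy. Keep an eye out for HTTPS in the address bar rather than HTTP, as this shows that a site uses SSL/TLS.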

Make sure the website that you intend to shop on is not a copy of a legitimate one. Verify that the date and name of the organization are consistent with the site you are visiting. And look for typos in the URL. Your best bet is to go directly to the website yourself, rather than accessing it through links on other sites or in emails.
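The two URL checks described above (HTTPS in the address bar, and typos in the domain name) can be sketched in a few lines of Python. This is a minimal illustration rather than a complete safeguard: the `TRUSTED_DOMAINS` list, the function name `check_shop_url`, and the 0.8 similarity threshold are all assumptions made for this example, and a lookalike domain can still slip past a simple string comparison.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Hypothetical shops the user already trusts (an assumption for this sketch).
TRUSTED_DOMAINS = ("example-shop.com", "example-market.co.uk")


def check_shop_url(url, trusted=TRUSTED_DOMAINS, threshold=0.8):
    """Return a list of warnings for a shop URL; an empty list means no red flags were found."""
    warnings = []
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]

    # Check 1: the site should be served over HTTPS, not plain HTTP.
    if parsed.scheme != "https":
        warnings.append("not using HTTPS")

    # Check 2: a host that is very similar to a trusted domain, but not
    # identical to it, may be a typo-based copy of the real site.
    if host not in trusted:
        for domain in trusted:
            if SequenceMatcher(None, host, domain).ratio() >= threshold:
                warnings.append(f"looks like {domain} but is not")
    return warnings


if __name__ == "__main__":
    for candidate in ("https://example-shop.com/deals", "http://examp1e-shop.com/deals"):
        print(candidate, check_shop_url(candidate))
```

A tool like this only flags candidates for a closer look; typing the address of a known retailer directly remains the safer habit.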

When using public Wi-Fi, use a VPN so that hackers cannot steal your personal data while you are on an unsecured network.

- Check Your Bank Account

- Use a credit card or a payment method that offers protection (e.g., PayPal).

- Check your accounts regularly for fraudulent activity.

- Only provide the details needed to complete your purchase; no extra details are required.

- Keep Your Passwords Safe & Don’t Use Default Credentials

Default credentials used by applications and appliances are often published on the internet. This can be a big problem. An attacker will typically first scan your network to see where they can move next. If an attacker is lucky enough to identify applications or appliances with default credentials enabled, it won’t take them long to find these published credentials online. Read how to detect default credentials, here.
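As a rough illustration of the default-credentials problem, the sketch below checks a device inventory against a short list of well-known default username and password pairs. Everything in it is hypothetical: the `KNOWN_DEFAULTS` sample, the inventory format, and the function name `find_default_credentials` are assumptions for this example, not SecurityHQ's detection method.

```python
# Hypothetical sample of published default credentials (illustrative only).
KNOWN_DEFAULTS = frozenset({
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
})


def find_default_credentials(devices, known_defaults=KNOWN_DEFAULTS):
    """Return the names of devices whose username/password pair is a known default."""
    flagged = []
    for device in devices:
        # Usernames are compared case-insensitively; passwords are compared exactly.
        pair = (device["username"].lower(), device["password"])
        if pair in known_defaults:
            flagged.append(device["name"])
    return flagged


if __name__ == "__main__":
    # Hypothetical inventory of appliances on a network.
    inventory = [
        {"name": "office-router", "username": "Admin", "password": "admin"},
        {"name": "nas-01", "username": "backup", "password": "x9!Lq2#vTz"},
    ]
    print(find_default_credentials(inventory))
```

Any device this flags should have its credentials changed before it is exposed to the network.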

Finally, keep your passwords safe. Read this blog on password protocols to learn more. Don’t let cyber scams ruin your festive fun this winter!

By SecurityHQ

-

Strategic merger of three digital technology firms in Asia

Three prominent digital services companies in Asia – Digile Technologies, Reveron Consulting, and Innopia Global – have merged to create a digital services powerhouse with a formidable presence in the Asian region.

Understanding the basics of how search engines register websites is crucial to succeeding in search engine optimization (SEO). SEO is one of the most effective website promotion techniques: it refers to improvements and changes made to web pages so that they conform to the criteria search engines use to rank and position listings.
